Overview

Case workers play a crucial role in collecting and managing sensitive information about families and children participating in state, local, and federal government programs. However, current processes rely heavily on handwritten or typed forms that contain notes from case worker observations. This poses several challenges that impact efficiency, decision-making, and family outcomes:

- Information Loss: Valuable insights are buried in unstructured PDF forms, making it difficult to review multiple documents quickly and accurately.

- Overburdened Staff: Case workers are overwhelmed by manual, repetitive tasks such as reviewing forms and summarizing information.

- Resource Drain: Significant time and resources are spent on administrative work rather than focusing on high-impact interventions.

- Burnout for Families: At-risk families are frequently required to provide the same information multiple times, adding to their stress and leading to disengagement.

By leveraging the power of AI and Data Analytics within Databricks, there is an opportunity to dramatically improve how case data is processed, prioritized, and acted upon – ultimately enabling smarter, faster decisions for those who need it most.

Solution

Our end-to-end solution on Databricks includes the following components:

- PDF Parsing: Extracting structured data (checkboxes, notes, dropdowns) from unstructured caseworker forms using Large Language Models (LLMs) and Python tools.

- Synthetic Data Generation: Creating statistically relevant synthetic records to supplement real-world data for robust model training.

- ML Risk Level Classification: Training a machine learning model to categorize cases as Low, Medium, or High risk based on the extracted form data.

- RAG Chatbot: Deploying a Retrieval-Augmented Generation (RAG) chatbot using LangChain and Databricks Vector Search to query form data, especially unstructured notes.

- AI/BI and Dashboards: Building interactive dashboards to monitor trends, outcomes, and form metrics.

We’ll now dive deeper into each of these components to show how they were built and the impact they deliver.

1. PDF Parsing

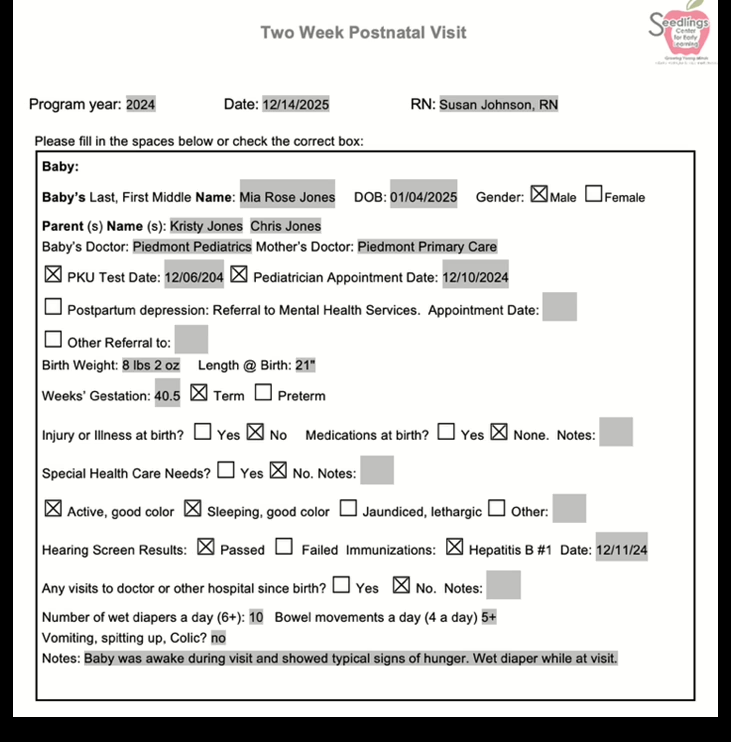

The image to the right shows an example of a Two-Week Postnatal Visit form completed with fictitious data. These PDFs contain complex unstructured elements such as checkboxes, drop-down menus, and free-text notes. To extract meaningful, structured data from these forms, we employed a combination of Python libraries and a Large Language Model (LLM):

- Pydantic (BaseModel): This Python library was used to define a schema that mirrors the structure of the form, enabling structured parsing.

- Unstruct’s LLMWhisperer: The PDF forms were passed to the LLMWhisperer API to extract raw text content from complex layouts and fields.

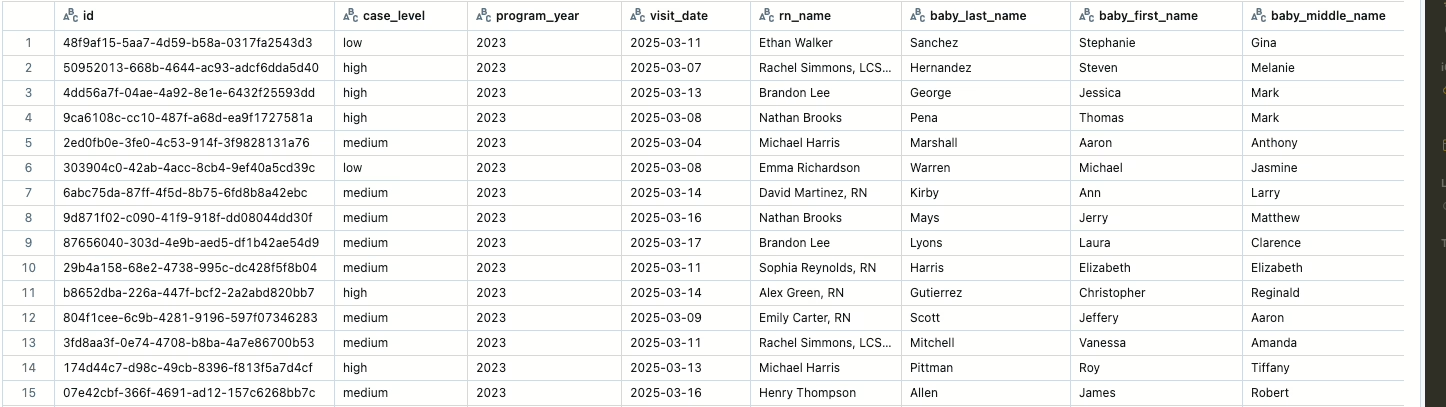

- GPT-4o with Structured Parsing: Using OpenAI’s structured output model, we converted the raw text into a structured DataFrame (Image 2). This DataFrame was then saved as a Delta Table in Databricks for scalable analysis and querying.

This approach allowed us to turn unstructured, hard-to-analyze PDF forms into clean, queryable datasets. This gave us the opportunity to unlock valuable insights from previously inaccessible information.

2. Synthetic Data Generation

To support both our machine learning model and the RAG (Retrieval-Augmented Generation) chatbot, we needed a sufficient volume of labeled and diverse data. However, the limited number of fictitious postpartum case forms made it challenging to build robust models and retrieval systems. To address this, we developed a synthetic data generator that produces statistically relevant data aligned with the patterns observed in the original samples. Our approach involved the following:- Controlled Randomization: Key fields such as program year, baby and mother dates of birth, case worker names, and referral statuses were randomly generated within logical boundaries to preserve real-world coherence.

- Text Variation: We injected controlled variability into the notes and concerns fields using predefined templates and randomized phrases.

- Batch Configuration: We used Databricks Jobs to orchestrate the synthetic data generation process at scale. These jobs allowed us to configure the number of records to generate per run, automate scheduling, and monitor execution all within the Databricks environment.

- Storage as Delta Table: Generated data was saved directly to a Delta table within Databricks, ensuring compatibility with downstream ML pipelines and RAG indexing workflows.

3. ML Risk Level Classification

To help case workers and supervisors quickly identify families needing urgent attention, we categorized the sample forms into three risk levels: High, Medium, and Low. These categories were based on a combination of the structured fields found within each form. Several key indicators contributed to the risk classification, including:- Signs of postpartum depression

- Repeated missed appointments

- Health flags from the baby’s postnatal records

- Quickly filter and surface high-risk cases for immediate review

- Reduce manual review time by highlighting only the most critical forms

- Support downstream analytics and dashboards by tagging records with consistent, model-driven risk levels

4. RAG Chatbot

To enable fast, natural-language access to the valuable information embedded in case forms, especially the free-text notes, we built a Retrieval-Augmented Generation (RAG) chatbot using Databrick’s Mosaic AI. This chatbot allows users to ask questions like “Which mothers showed signs of distress?” or “List high-risk cases without follow-up notes” and get answers in real time. The chatbot architecture leverages several integrated Databricks features:- Indexing with Databricks Vector Search: After preprocessing and chunking the form data, particularly the case worker notes, we used the Databricks BGE embedding model to convert each text chunk into semantic vectors. These embeddings were then stored in a Mosaic AI Vector Search index, enabling efficient similarity-based retrieval. This setup allowed the RAG chatbot to retrieve the most relevant context for each user query with high accuracy and low latency.

- LLM-powered Responses with DBRX: For generating answers, we used Databricks’ DBRX model, a powerful large language model designed for enterprise-grade performance and cost efficiency. This model synthesizes the retrieved chunks and crafts contextual answers grounded in the source material.

- Model Logging with MLflow: The RAG pipeline was packaged and logged using MLflow, enabling version control, metadata tracking, and reproducibility.

- Model Serving via Unity Catalog: The chatbot was deployed as a registered model in Unity Catalog, allowing secure, scalable serving across the organization with role-based access controls.

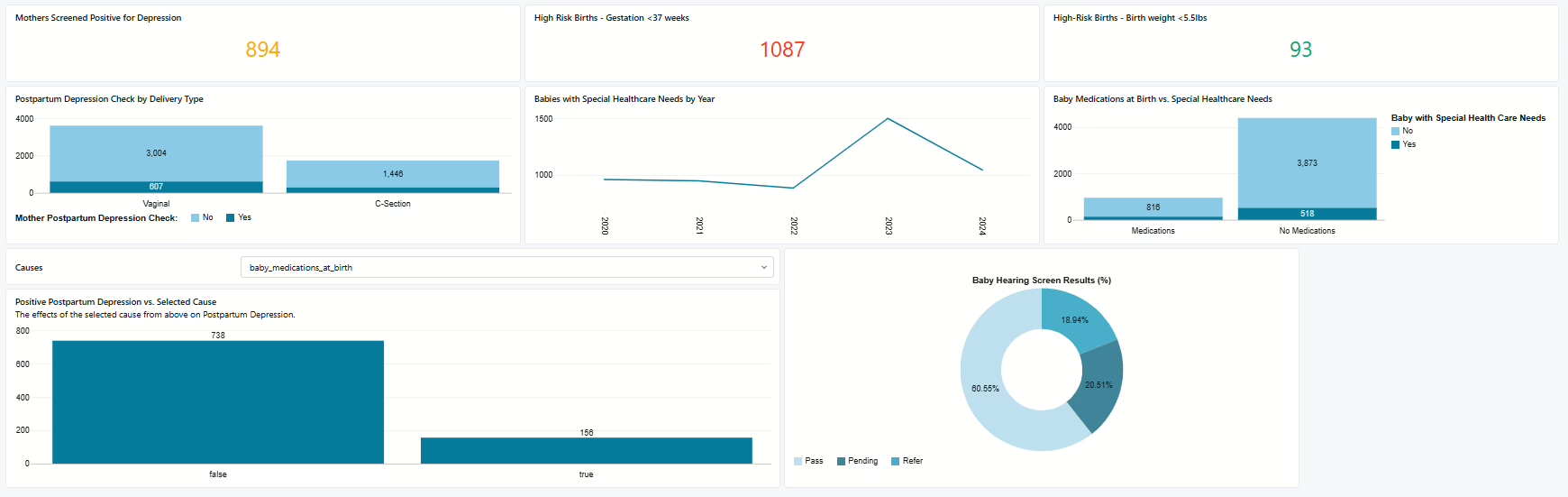

5. AI/BI and Dashboards

To monitor trends, outcomes, and key metrics from caseworker forms, we developed interactive dashboards using Databricks SQL. These dashboards enable us to quickly identify high-risk cases and provide stakeholders with built-in filters to explore specific areas of interest. We incorporated a variety of key performance indicators and dynamic visualizations to reveal trends over time and surface actionable, data-driven insights. By leveraging Databricks SQL, we streamlined our reporting process and empowered stakeholders to make informed decisions with real-time data The Dashboards leverage several integrated Databricks features:- Dynamic graphs and charts that update in real time based on selected filters and data sources.

- Row and column-level security to anonymize sensitive data such as names and personally identifiable information (PII).

- Granular permissions that allow users to access all or specific subsets of data based on their role or department.

- SQL queries and custom visualizations tailored to stakeholder needs for quick insights and trend detection.

- Interactive filters that enable stakeholders to drill down into specific areas of interest and explore different dimensions of the data.

- Automated refreshes of data, ensuring up-to-date information is always at hand for decision-making.

The Priority Insights tab highlights the most critical KPIs and charts, enabling users to quickly determine if immediate action is needed. Conditional coloring draws attention to urgent issues, making key insights stand out. This section also showcases the flexibility and robustness of Databricks dashboards, featuring a variety of chart types and a custom pick-list filter for tailored analysis.

The Casework Insights dashboard offers a comprehensive view of both individual caseworker performance and overall team trends. By highlighting form completeness for each caseworker, the dashboard helps the agency quickly identify where additional training may be needed or if a form design is causing confusion. Additionally, a column chart pinpoints caseworkers with lower form completion rates, which may signal potential burnout from excessive caseloads.

Conclusion

By utilizing Databricks’ unified Data Intelligence Platform for AI, BI, and ML solutions, we developed a comprehensive solution that transforms how child welfare programs manage, analyze, and act on case data. This platform allowed us to seamlessly integrate PDF parsing, synthetic data generation, risk classification, conversational AI, and interactive dashboards—all within a single, governed environment.

Want to Learn More?

Contact Infinitive for a discovery session or download our eBook to explore our migration methodology in detail.